This blog post only exists to prevent broken links. My blog has moved to: http://pabloaizpiri.com/

--------------------------------------------------------------------------------

Programming Love & 3D Engines

I have an admission to make: my true love of programming comes from game development. Sure, I love a good web app, but honestly I just love cool technology. Networking is cool. Database internals are cool. But what technology is cooler than a 3D engine? Now that's awesome!

3D engines and games intrigue me because of the technical challenges they must address. Most business applications address some of these challenges in one way or another, but a 3D engine usually has to address all of them, and do it very well. Sorting? Check. Searching? Check. Drawing? Check. Complex math? Check. AI? Sound? Hardware? Etc. On top of implementing complex algorithms, a good 3D engine needs a strong architecture to manage its complexity while staying efficient and still offering great power. It has to be a database (of triangles, essentially), constantly read input, and perform all sorts of logic, and all of these pieces must come together seamlessly to create a great game.

How I Started

My first experience was with LEGO MINDSTORMS when I was about 12: I programmed the RCX (2.0) for a cool little game with a light sensor and a moving piece of paper. Next came GameMaker when I was 13, then 3D RAD, A5 Game Studio Engine, and finally Blitz3D.

Over the last couple of years I've wanted to skip all the middleware and write a 3D engine using OpenGL or DirectX. At one point I wrote a very simple software renderer, but that was about as close as I got... I always had a hard time picking up DirectX or OpenGL. It seemed like SO MUCH was thrown at you just to draw a triangle, and that would have been fine, except I wanted to know what all that code did. On top of that, I'm not super proficient in C++, which most tutorials are written in.

At Last: Beginning DirectX

One weekend while I was visiting a friend in Houston, he convinced me to buy an old book he knew I was interested in. (I read it the whole time we were at Half Price Books.) It was about writing a managed 3D engine: just what I wanted! I slowly started on it, and I actually started making progress. However, it wasn't long before I realized I was working with Managed DirectX 9, a boat that had sunk and that MS had abandoned, though by then I had already made some good progress. Encouraged by that progress, I decided to push forward and try once again to tackle DirectX through a managed interface or library like SlimDX. I had tried it in the past and it had never worked out well, but in my search I also found SharpDX, which is essentially a library of "extern" C# calls to the DirectX API. I figured I might as well stay as close to the native API as possible, since most DirectX tutorials are written in C++ against that same API. Another major reason I chose it was SharpDX's performance.

This turned out to be pretty great. The DirectX API is actually starting to make a lot more sense now, and I feel I could even jump straight into C++. However, for my needs the performance is already overkill, and since I can develop much faster in C# with the familiarity of the .NET Framework, I'm going to stick with that. I've actually gotten far enough that I've implemented a simple object management system (for moving entities around relative to one another) and built an import function for MQO models (still limited, though). MQO is the format of the 3D modeller Metasequoia. I know it is a strange and probably obscure, little-known modeller, but I found it a few years ago through the FMS website and have found it to be an absolutely EXCELLENT simple, easy-to-use, and free 3D modeller. I LOVE it!
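The post doesn't describe how JDX's object management system is built. One common way to move entities "relative" to one another is a parent/child hierarchy (a scene graph): each node stores a transform relative to its parent, and its world transform is its local transform composed with the parent's world transform. Below is a minimal, hypothetical sketch in Python, not JDX's actual code; `Node`, `mat_mul`, `translation`, and `rotation_y` are illustrative names. It assumes the Direct3D row-vector convention (v' = v * M), so a child's world matrix is `local * parent_world`, and it lays out the Y rotation the way `D3DXMatrixRotationY` does.

```python
import math


def identity():
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def mat_mul(a, b):
    """4x4 product a*b. With row vectors (v' = v * M), a is applied first."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    m = identity()
    m[3][0], m[3][1], m[3][2] = x, y, z  # row-vector convention: bottom row
    return m


def rotation_y(angle):
    """Rotation about +Y, laid out like D3DXMatrixRotationY (left-handed)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, -s, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [s, 0.0, c, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


class Node:
    """Hypothetical scene-graph node: its local transform is relative to its parent."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []
        self.local = identity()
        if parent is not None:
            parent.children.append(self)

    def world(self):
        # Apply this node's local transform first, then each ancestor's in turn.
        if self.parent is None:
            return self.local
        return mat_mul(self.local, self.parent.world())

    def world_position(self):
        m = self.world()
        return (m[3][0], m[3][1], m[3][2])


if __name__ == "__main__":
    tank = Node("tank")
    # Turn the tank 90 degrees about Y, then place it at x = 10.
    tank.local = mat_mul(rotation_y(math.pi / 2), translation(10, 0, 0))
    turret = Node("turret", parent=tank)
    turret.local = translation(1, 0, 0)  # one unit along the tank's own +X axis
    print(turret.world_position())  # approximately (10, 0, -1)
```

Because the turret only stores its offset from the tank, moving or rotating the tank carries the turret along with no extra bookkeeping. This sketch walks up the parent chain on every `world()` call; a real engine would more likely cache world matrices and refresh them only when something changes.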

Credit Where Credit Is Due

I definitely have to give credit to the following sources for getting me where I am right now.

Introduction to 3D Game Engine Design Using DirectX 9 and C# - This was the book that somehow "flipped the switch" and made DirectX make sense after I had read and coded through only a couple of chapters. (I skimmed 3-4 of the others and never read the rest.)

http://www.two-kings.de/ - Some help from its clear explanations and tutorials.

http://zophusx.byethost11.com/tutorial.php?lan=dx9&num=0 - HUGE help. This guy goes into enough DETAIL that I could actually understand. It made me seriously consider switching to C++...

http://www.toymaker.info/Games/html/lighting.html - Helped my understanding of shaders.

http://www.rastertek.com/tutindex.html - Helped a LOT in understanding and writing shaders for multi-texturing and special effects.

And of course Wikipedia (if you use it but haven't donated, you should!) and the DirectX documentation. It's MUCH easier to read now that I've grasped the major concepts, though I undoubtedly still have quite a way to go. And finally, thank God for the Internet and search engines... if you're persistent, you'll find what you need.

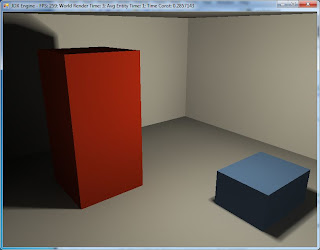

The JDX Engine

So that's my newest passion: building this managed 3D engine. I couldn't really think of a name and finally settled on "JDX". Once I've made a fair amount of progress, I actually want to build a Recoil clone (I played this game when I was 13 and have fond memories of it), since that would be easy to do and wouldn't require much artistic skill. If it turns out well, I'd also love to make this 3D engine public for anyone who wants to write a managed DirectX 3D game without being a DirectX expert. It will probably be modeled somewhat after the Blitz3D API, since I've always found it extremely intuitive.

I'll probably post updates on JDX here and there when I can. I'm mostly teaching myself, so I'm making a lot of mistakes. I'm very open to learning how to actually write a good, efficient D3D codebase: a lot of examples *work*, but there seem to be many different ways to do things in D3D, and I want not just to perform the task but to do it efficiently.

Which brings me to one of my major new pet peeves: most tutorials say very little about how to actually structure the code well. (E.g., do you store a vertex buffer for each object in your world, or do you try storing them all in the same buffer? What about object parts? How do you apply the correct shaders when you do so? Etc.)
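One common answer to the single-buffer question, though not necessarily the best one for every engine, is to pack several meshes into one shared vertex buffer and one shared index buffer and remember where each mesh lives. In Direct3D 10/11, `DrawIndexed(IndexCount, StartIndexLocation, BaseVertexLocation)` lets each draw call select its own slice, so each mesh's indices can stay local to that mesh. The sketch below is a hypothetical, CPU-side illustration in Python; `MeshRange`, `pack_meshes`, and `resolve` are made-up names, and `resolve` only emulates how the GPU combines the two offsets when fetching vertices.

```python
from dataclasses import dataclass


@dataclass
class MeshRange:
    """Where one mesh lives inside the shared buffers (hypothetical type)."""
    base_vertex: int   # added to every index, like BaseVertexLocation
    start_index: int   # first index to read, like StartIndexLocation
    index_count: int   # number of indices to draw, like IndexCount


def pack_meshes(meshes):
    """Concatenate {name: (vertices, indices)} into one vertex list and one index list.

    Indices are copied unchanged (still local to their mesh); base_vertex rebases them.
    """
    vertices, indices, ranges = [], [], {}
    for name, (mesh_vertices, mesh_indices) in meshes.items():
        ranges[name] = MeshRange(len(vertices), len(indices), len(mesh_indices))
        vertices.extend(mesh_vertices)
        indices.extend(mesh_indices)
    return vertices, indices, ranges


def resolve(vertices, indices, r):
    """Emulate the vertex fetch for one indexed draw call over range r."""
    return [vertices[r.base_vertex + indices[r.start_index + i]]
            for i in range(r.index_count)]


if __name__ == "__main__":
    meshes = {
        "triangle": ([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [0, 1, 2]),
        "quad": ([(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)], [0, 1, 2, 0, 2, 3]),
    }
    vertices, indices, ranges = pack_meshes(meshes)
    print(ranges["quad"])  # MeshRange(base_vertex=3, start_index=3, index_count=6)
```

With this layout, object parts could simply be separate ranges, with the shader switched between draw calls. Whether that beats one buffer per object depends on how often meshes change and how many draw calls a scene needs, so treat it as one option rather than the answer.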

Until next time...

UPDATE: I bought another couple of books from Amazon on 3D mathematics and DirectX 10; they're helping a lot. The engine is coming along well... here are some updates.